Women's Fashion and Apparel

Men's Fashion and Apparel

Girls' Fashion and Apparel

Boys' Fashion and Apparel

Sports

Home and Kitchen

Vitamins and Dietary Supplements

Bags, Backpacks and Umbrellas

Suitcases and Travel Bags

Beauty and Makeup

Perfume and Cologne

Skin Care

Hair Care

Hand and Foot Skin Care

Oral and Dental Hygiene

Hair and Body Grooming Tools

Bedding

Towels and Bath Accessories

Toys

Baby and Kids Supplies

Computer and Console Games

Professional Audio Equipment

Portable Audio Equipment

Video and Photo Cameras

CCTV Cameras

Smart Watches

Pet Food and Supplies

Clothing

Dresses and Trousers

Tops, Blouses and Crop Tops

Maternity

Plus Size

Shoes

Clothing

T-Shirts and Polo Shirts

Shirts

Coats and Jackets

Sleepwear and Bathrobes

Hats

Plus-Size Clothing

Suits

Shoes

Sandals and Slippers

Wallets, Card Holders and Money Clips

Clothing

T-Shirts

Polo Shirts

Coats and Jackets

Shoes

Clothing

Shirts

T-Shirts

Polo Shirts

Shoes

Sandals and Slippers

Women's

Men's

Kids and Teens

Sports Equipment

Football

Basketball

Table Tennis

Badminton

Cycling

Surfing

Fishing

Boxing and Martial Arts

Camping

Horse Riding

Skates and Scooters

Baseball

Cricket

Protective Gear

Sports Medicine

Diving and Snorkeling

Sport Fashion and Accessories

Fitness Equipment

Outdoor Sports Equipment

Basketball Equipment

Football Equipment

Running Gear

Home Electrical Appliances

Irons and Ironing Boards

Sewing Machines

Kitchen Electrical Appliances

Cooking Equipment

Coffee Makers and Milk Frothers

Food Preparation Equipment

Specialty Kitchen and Entertaining Supplies

Beverage Serving and Storage

Kitchenware and Kitchen Equipment

Tableware and Food Serving

Cookware

Home Interior Decoration

Air Fresheners and Fragrance Diffusers

Vitamins and Supplements

Sports Supplements

Strengthening and Regulating Supplements

Laptop Bags and Backpacks

Backpacks

Face Makeup

Eyebrows and Eyes

Nails

Repair Treatments and Serums

Moisturizers

Skin Care Tools

Sun Protection

Eye Area Care

Shampoo and Conditioner

Hair Styling Tools and Products

Scalp Care

Hair Color Products and Tools

Toothbrushes

Bedding Sets

Pillows and Bed Positioners

Blankets

Bathrobes

Outdoor Play and Playground Equipment

Water Games and Swimming Gear

Ride-On Cars, Tricycles and Scooters

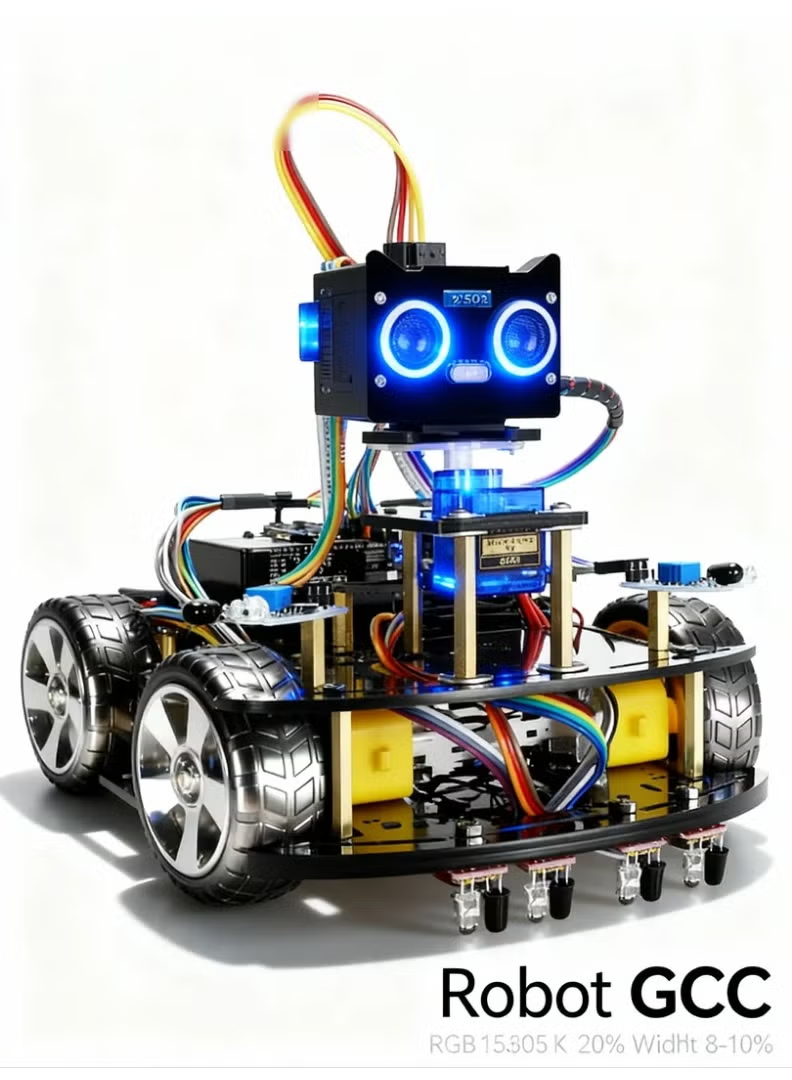

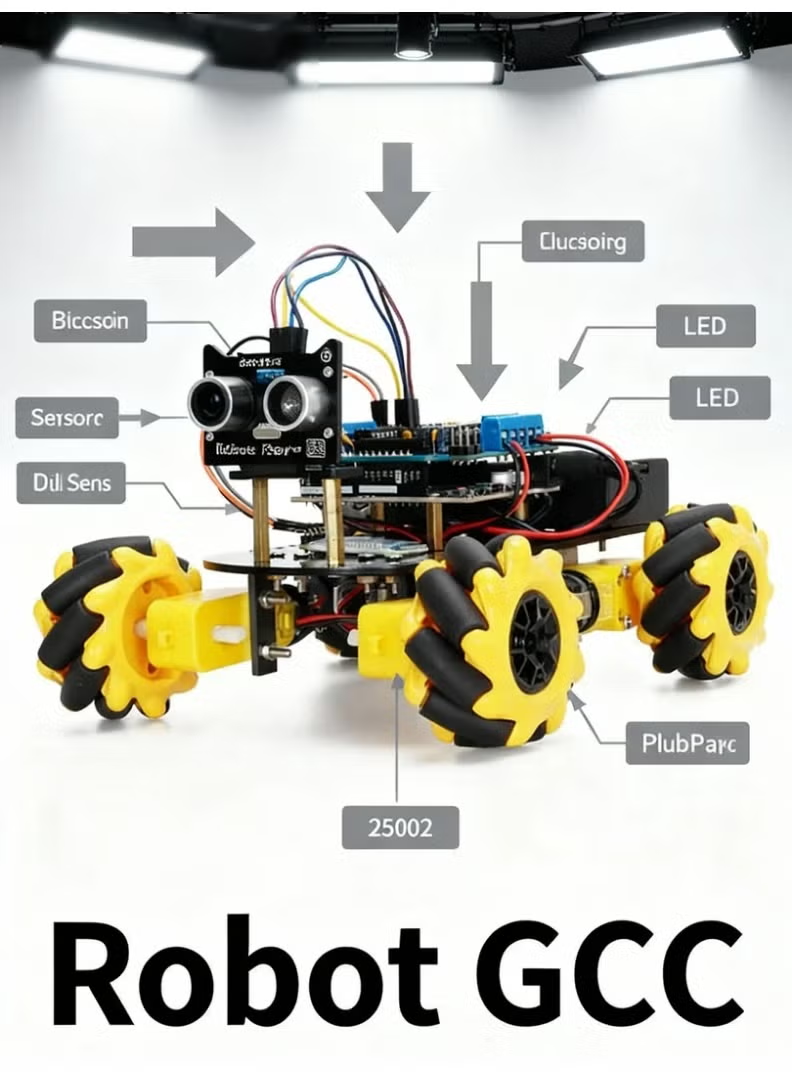

Hand-Pushed, Remote-Control and RC Cars

RC Vehicles and Parts

Figures and Statues

Electronic Toys

Model and Motion Toys

Occupation and Place Playsets

Dolls and Accessories

Fabric and Plush Dolls

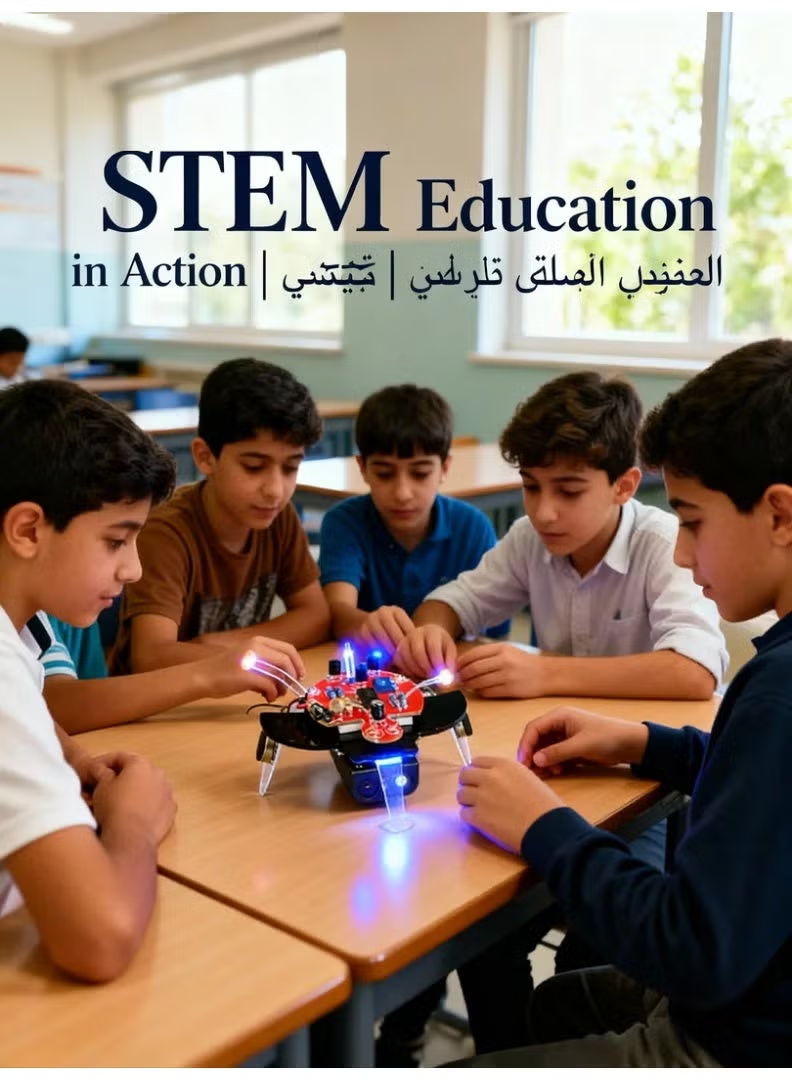

Learning and Educational

Bedding

Child Care and Supplies

Child Feeding and Care

Hygiene and Care

Clothing and Shoes

MP3/MP4 Players

Cameras

Video and Photo Accessories

Lighting and Studio

Lenses and Accessories

Smart Watches

Fitness Tracker Watches

Delivery between 29 Aban and 08 Azar

Guarantee of authenticity and physical condition of the goods

Free shipping nationwide
